DNS is one of the highest-volume data sources. Yet security teams can’t ignore it: it is a must-have source for investigating malware, C2 traffic, and data exfiltration. Actively monitoring and investigating DNS alongside other security data sources is a sign of a more advanced, mature security program.

Today, let’s walk through how a Query customer scaled their program to do exactly that. The Query Security Data Mesh makes DNS data available in your SIEM, so you can run investigations and detections without increasing your SIEM licensing costs.

Customer Environment and Use Case

This is a modern organization whose infrastructure runs entirely in AWS, across multiple AWS accounts broken out by both function and region, including several international regions. The CISO’s goal was to expand the security team’s visibility into DNS queries, resolutions, and malicious lookups. While ingress and egress traffic was the primary goal, investigating the higher-volume east-west lookups was a desired stretch goal.

This organization has used Splunk as its primary SOC tool for years. Given the team’s existing practices, investments, and skill sets, it had already been decided that analysts would use Splunk for their investigations. The CISO had a limited budget for the project, so the standard path of indexing DNS data into Splunk was not an option because of the massive volume increase it would have caused. So the team went back to the drawing board to explore alternatives.

Security Data Mesh Architecture with Query

The team had heard of data mesh architectures, and some quick searching led them to Query’s website. After learning about Query Federated Search and seeing a demo of the Query Splunk App, which enables investigations from Splunk without indexing data into Splunk, they decided to run a pilot project.

Scoping the Pilot Project

The organization defined a pilot project in their lab regions to operationalize the following:

- Route 53 Resolver query logging

- An Amazon S3-based data lake for centralization and archival

  - Note that Amazon Security Lake is the built-in option from AWS, but this customer chose to self-manage its data lake in Amazon S3.

- Query Data Mesh Connector to query Amazon S3 via Amazon Athena

- Analysts never leave the Splunk console:

  - All investigations run via SPL in Splunk Search and/or via dashboards (enabled by the Query Splunk App)

  - Detections that analysts can author and customize, running in Splunk

- DNS-based threat intel feeds for enrichment

DNS (Route 53 Resolver) Logging

The customer enabled Route 53 Resolver query logging in all the AWS accounts participating in the pilot. This captured all DNS queries made from their VPCs, along with the responses. For detailed steps, refer to the AWS documentation at Resolver query logging – Amazon Route 53.
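For teams that prefer to script this step, here is a minimal Python sketch. It assumes a boto3 Route 53 Resolver client (`boto3.client("route53resolver")`) and an existing S3 bucket ARN as the destination; the function name, config name, and request ID are hypothetical. The underlying boto3 operations are `create_resolver_query_log_config` and `associate_resolver_query_log_config`. The client is passed in so the sketch can be exercised without AWS credentials.

```python
from typing import Any, Iterable, List


def enable_resolver_query_logging(
    client: Any,
    name: str,
    destination_arn: str,
    vpc_ids: Iterable[str],
    request_id: str,
) -> List[str]:
    """Create one Resolver query logging config and associate it with each VPC.

    `client` is expected to behave like boto3.client("route53resolver").
    Returns the association IDs, one per VPC.
    """
    # One config is created per account and region; it can then be shared by many VPCs.
    config = client.create_resolver_query_log_config(
        Name=name,
        DestinationArn=destination_arn,  # e.g. the central S3 bucket ARN
        CreatorRequestId=request_id,     # idempotency token for safe retries
    )
    config_id = config["ResolverQueryLogConfig"]["Id"]

    association_ids = []
    for vpc_id in vpc_ids:
        # Each association starts logging DNS queries that originate in that VPC.
        resp = client.associate_resolver_query_log_config(
            ResolverQueryLogConfigId=config_id,
            ResourceId=vpc_id,
        )
        association_ids.append(resp["ResolverQueryLogConfigAssociation"]["Id"])
    return association_ids
```

In practice this would run once per account and region in scope, with the VPC IDs for that account and region.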

Sample raw log JSON:

```json
{
  "srcaddr": "4.5.64.102",
  "vpc_id": "vpc-7example",
  "answers": [
    {
      "Rdata": "203.0.113.9",
      "Type": "PTR",
      "Class": "IN"
    }
  ],
  "firewall_rule_group_id": "rslvr-frg-01234567890abcdef",
  "firewall_rule_action": "BLOCK",
  "query_name": "15.3.4.32.in-addr.arpa.",
  "firewall_domain_list_id": "rslvr-fdl-01234567890abcdef",
  "query_class": "IN",
  "srcids": {
    "instance": "i-0d15cd0d3example"
  },
  "rcode": "NOERROR",
  "query_type": "PTR",
  "transport": "UDP",
  "version": "1.100000",
  "account_id": "111122223333",
  "srcport": "56067",
  "query_timestamp": "2021-02-04T17:51:55Z",
  "region": "us-east-1"
}
```

The sample above captures key information such as the originating VPC ID and AWS region, the source instance’s ID and IP address, and the requested DNS name and record type. The response data captures the IP address returned in response to the query, along with the response code, such as NOERROR or SERVFAIL, and any DNS Firewall rule action, such as BLOCK.
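To show how these fields might be pulled out downstream, here is a small Python sketch that parses one log line in the format of the sample above into a summary record. The `ResolverLogSummary` type and the choice of fields are illustrative assumptions, not part of any AWS or Query API.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ResolverLogSummary:
    """Hypothetical summary of one Route 53 Resolver query log record."""
    account_id: str
    region: str
    vpc_id: str
    instance_id: str
    src_ip: str
    query_name: str
    query_type: str
    rcode: str
    answers: List[str]
    firewall_action: str


def summarize(raw_line: str) -> ResolverLogSummary:
    rec = json.loads(raw_line)
    return ResolverLogSummary(
        account_id=rec["account_id"],
        region=rec["region"],
        vpc_id=rec["vpc_id"],
        # Treated as optional here; a missing instance ID becomes "".
        instance_id=rec.get("srcids", {}).get("instance", ""),
        src_ip=rec["srcaddr"],
        query_name=rec["query_name"],
        query_type=rec["query_type"],
        rcode=rec["rcode"],
        # Keep only the record data of each answer.
        answers=[a["Rdata"] for a in rec.get("answers", [])],
        # Firewall fields only appear when a DNS Firewall rule matched.
        firewall_action=rec.get("firewall_rule_action", ""),
    )
```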

Centralized S3 Logging Across Accounts/Regions

The security team had a designated AWS account for centralized logging to S3, and they set up the necessary permissions for the other accounts to write to its bucket. See the steps at AWS resources that you can send Resolver query logs to – Amazon Route 53.
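The exact permissions depend on how delivery is configured, so treat this as a hedged sketch: a Python helper that builds an S3 bucket policy modeled on the common AWS log-delivery pattern (service principal `delivery.logs.amazonaws.com`, writes under `AWSLogs/<account-id>/`), scoped to the participating source accounts. The bucket name and account IDs are placeholders; verify the statements against the AWS page referenced above before applying them.

```python
from typing import Any, Dict, List


def log_delivery_bucket_policy(bucket: str, source_accounts: List[str]) -> Dict[str, Any]:
    """Build a bucket policy letting AWS log delivery write for the given accounts."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSLogDeliveryWrite",
                "Effect": "Allow",
                "Principal": {"Service": "delivery.logs.amazonaws.com"},
                "Action": "s3:PutObject",
                # One AWSLogs/<account-id>/ prefix per source account.
                "Resource": [f"{bucket_arn}/AWSLogs/{acct}/*" for acct in source_accounts],
                "Condition": {
                    "StringEquals": {
                        # The central account keeps ownership of delivered objects.
                        "s3:x-amz-acl": "bucket-owner-full-control",
                        # Only deliveries on behalf of these accounts are allowed.
                        "aws:SourceAccount": source_accounts,
                    }
                },
            },
            {
                "Sid": "AWSLogDeliveryAclCheck",
                "Effect": "Allow",
                "Principal": {"Service": "delivery.logs.amazonaws.com"},
                "Action": "s3:GetBucketAcl",
                "Resource": bucket_arn,
                "Condition": {"StringEquals": {"aws:SourceAccount": source_accounts}},
            },
        ],
    }
```

The resulting dict can be serialized with `json.dumps` and attached to the central bucket.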

For cost-effective storage and querying, the customer used the Apache Iceberg table format with Parquet files partitioned by account, region, and date. S3 lifecycle policies kept data in S3 Standard for 30 days and then in Glacier for a further 60 days. For a detailed, deeply technical reference, see Amazon Athena and Apache Iceberg for Your SecDataOps Journey.
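Here is a minimal Python sketch of both pieces, under stated assumptions: the table name, S3 location, and column subset are hypothetical; the DDL uses Athena’s Iceberg `CREATE TABLE` syntax with a `day()` partition transform; and the lifecycle rule assumes objects are deleted once their 60 Glacier days are up (30 + 60 = 90 days after creation). The lifecycle dict has the shape that boto3’s `put_bucket_lifecycle_configuration` expects for its `LifecycleConfiguration` argument.

```python
from typing import Any, Dict


def iceberg_ddl(table: str, location: str) -> str:
    """Athena DDL for an Iceberg table partitioned by account, region, and day."""
    # In Iceberg, partition columns are ordinary schema columns; day() is a transform.
    return f"""CREATE TABLE {table} (
  account_id string,
  region string,
  vpc_id string,
  query_timestamp timestamp,
  query_name string,
  query_type string,
  rcode string,
  srcaddr string
)
PARTITIONED BY (account_id, region, day(query_timestamp))
LOCATION '{location}'
TBLPROPERTIES ('table_type' = 'ICEBERG', 'format' = 'parquet')"""


def lifecycle_rules(prefix: str) -> Dict[str, Any]:
    """Lifecycle config: 30 days in S3 Standard, 60 more in Glacier, then delete."""
    return {
        "Rules": [
            {
                "ID": "dns-logs-retention",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Objects move to Glacier 30 days after creation...
                "Transitions": [{"Days": 30, "StorageClass": "GLACIER"}],
                # ...and are deleted 90 days after creation (assumed, 30 + 60).
                "Expiration": {"Days": 90},
            }
        ]
    }
```

One design consequence worth checking: Athena does not read objects in the S3 Glacier Flexible Retrieval storage class (`GLACIER`), so data in that tier serves archival rather than live federated search.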

Note that while this customer chose Apache Iceberg, good alternatives such as Delta Lake and Apache Hudi exist. To understand the choices and technical details, refer to “Have a Security Data Lake on Amazon S3? Read This Blog” and/or “Optimizing Delta Lake Security Data Lakehouses”.

Enabling Query Data Mesh To Access S3 via Athena

The customer then made the S3 data above accessible to our Data Mesh via Athena, following the documentation at Amazon Athena (for Amazon S3).
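Before connecting the data mesh, it can help to confirm that Athena can actually query the table. Here is a Python sketch, assuming a boto3 Athena client (`boto3.client("athena")`), a hypothetical database name, and an S3 location for Athena query results. It uses the `start_query_execution`, `get_query_execution`, and `get_query_results` operations and reads only the first page of results.

```python
import time
from typing import Any, List


def run_athena_query(
    client: Any, sql: str, database: str, output_s3: str, poll_seconds: float = 1.0
) -> List[List[str]]:
    """Run a query through an Athena client and return rows as lists of strings."""
    qid = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_s3},
    )["QueryExecutionId"]

    # Poll until the query reaches a terminal state.
    while True:
        state = client.get_query_execution(QueryExecutionId=qid)["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query {qid} ended in state {state}")

    # Only the first page is read; larger results need NextToken paging.
    rows = client.get_query_results(QueryExecutionId=qid)["ResultSet"]["Rows"]
    # NULL cells come back without a VarCharValue key, so default them to "".
    return [[cell.get("VarCharValue", "") for cell in row["Data"]] for row in rows]
```

For a SELECT statement, the first row Athena returns is the column header row.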

Note that with the data mesh architecture, data stays in place and does not move. It is accessed live, on demand, and only the query results are returned. Results are ephemeral and are discarded when the user session ends, although users can choose to store them elsewhere, such as in a Splunk summary index.

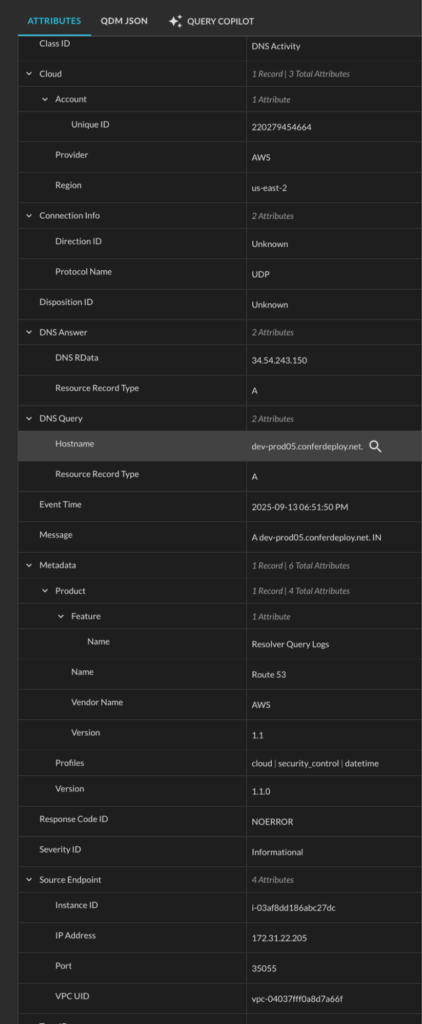

Normalization Into OCSF DNS Activity

The data mesh applies OCSF normalization in both directions: to query conditions on the way in, and to results on the way out, while the data stored in S3 remains in its native raw format (see the sample above). The customer considered this approach beneficial because (a) it avoided the compute cost of transforming the entire large dataset at insert time, and (b) it kept the original records intact, which helps from a data integrity and regulatory compliance perspective. The Open Cybersecurity Schema Framework (OCSF) is a hierarchical data model; to learn more, read the Query Absolute Beginner’s Guide to OCSF.
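To illustrate normalization on query conditions, here is a hypothetical Python sketch that rewrites a single OCSF condition into a SQL predicate over the raw Route 53 columns. The mapping table is our own assumption, not Query’s implementation. It also assumes Route 53 `rcode` values use the standard DNS mnemonics and that `query_name` carries a trailing dot, as in the sample above.

```python
from typing import Any, Callable, Dict, Tuple

# Assumed subset of OCSF rcode_id values (they follow the IANA DNS RCODE numbers).
RCODE_NAMES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN", 5: "REFUSED"}

# Hypothetical mapping: OCSF DNS Activity field -> (raw Route 53 column, value transform).
CONDITION_MAP: Dict[str, Tuple[str, Callable[[Any], Any]]] = {
    # Raw query names are fully qualified, so append the trailing root dot.
    "query.hostname": ("query_name", lambda v: v if v.endswith(".") else v + "."),
    "query.type": ("query_type", str),
    "rcode_id": ("rcode", lambda v: RCODE_NAMES[int(v)]),
    "src_endpoint.ip": ("srcaddr", str),
    "cloud.region": ("region", str),
}


def translate_condition(ocsf_field: str, value: Any) -> str:
    """Rewrite `ocsf_field = value` into a predicate on the raw table."""
    column, transform = CONDITION_MAP[ocsf_field]
    raw = str(transform(value)).replace("'", "''")  # escape single quotes for SQL
    if "*" in raw:
        # Map '*' wildcards to LIKE's '%'. Literal '%' or '_' are not escaped here.
        return f"{column} LIKE '{raw.replace('*', '%')}'"
    return f"{column} = '{raw}'"
```

Because the predicate is expressed against raw columns, the filter can be pushed down to Athena without first rewriting the stored data into OCSF.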

Query has a Copilot-assisted schema configuration wizard that applies the normalization automatically, while still letting the customer’s admin review and customize the mappings. The Copilot mapped the Route 53 Resolver logs above into the OCSF DNS Activity class.
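As a rough, hypothetical illustration of the result-side direction (not the Copilot’s actual mapping), this Python sketch maps one raw record into a simplified OCSF DNS Activity object (class_uid 4003). It covers only a handful of attributes and omits others that OCSF requires, such as severity_id; check attribute names against the OCSF schema before relying on them.

```python
from datetime import datetime, timezone
from typing import Any, Dict

# Assumed mapping of Route 53 rcode strings to OCSF rcode_id values.
OCSF_RCODE_IDS = {"NOERROR": 0, "FORMERR": 1, "SERVFAIL": 2, "NXDOMAIN": 3, "NOTIMP": 4, "REFUSED": 5}


def to_ocsf_dns_activity(rec: Dict[str, Any]) -> Dict[str, Any]:
    """Map a raw Route 53 Resolver record to a simplified OCSF DNS Activity dict."""
    ts = datetime.strptime(rec["query_timestamp"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return {
        "category_uid": 4,  # Network Activity
        "class_uid": 4003,  # DNS Activity
        "time": int(ts.timestamp() * 1000),  # OCSF timestamps are epoch milliseconds
        "query": {
            "hostname": rec["query_name"].rstrip("."),  # drop the trailing root dot
            "type": rec["query_type"],
            "class": rec["query_class"],
        },
        "rcode": rec["rcode"],
        "rcode_id": OCSF_RCODE_IDS.get(rec["rcode"], 99),  # 99 = Other
        "answers": [
            {"rdata": a["Rdata"], "type": a["Type"], "class": a["Class"]}
            for a in rec.get("answers", [])
        ],
        "src_endpoint": {
            "ip": rec["srcaddr"],
            "port": int(rec["srcport"]),
            "vpc_uid": rec["vpc_id"],
            "instance_uid": rec.get("srcids", {}).get("instance"),
        },
        "cloud": {"provider": "AWS", "region": rec["region"], "account": {"uid": rec["account_id"]}},
        "connection_info": {"protocol_name": rec["transport"].lower()},
    }
```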

Here is what a Route 53 Resolver query log entry looks like in OCSF DNS Activity:

Searching From Splunk

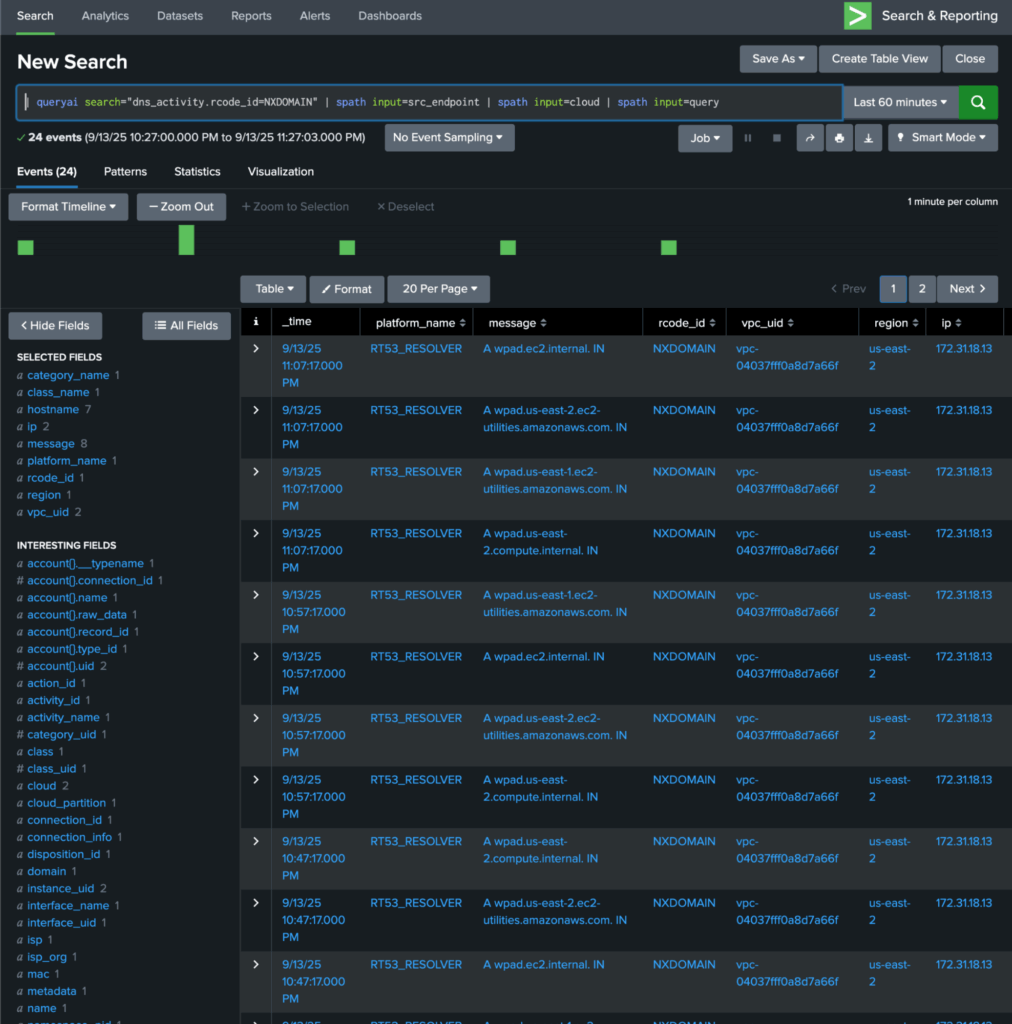

The customer’s Splunk admin enabled the Query Splunk App by following this guide. The app made it possible to run federated searches on remote data sources without indexing the data into Splunk. It extended SPL with the | queryai search command while still letting their Splunk users run their standard SPL pipeline commands. For example, here is how they searched for DNS queries that resulted in NXDOMAIN:

```
| queryai search="dns_activity.rcode_id=NXDOMAIN" | spath input=src_endpoint | spath input=cloud | spath input=query
```

The … | spath input= commands extract each input object’s sub-fields.

If needed, users could save focused results into a summary index with the … | collect … SPL command.

Here is how the tabular results of the NXDOMAIN search above look (note: customer data is not shown; this is a representative screenshot from our lab data):

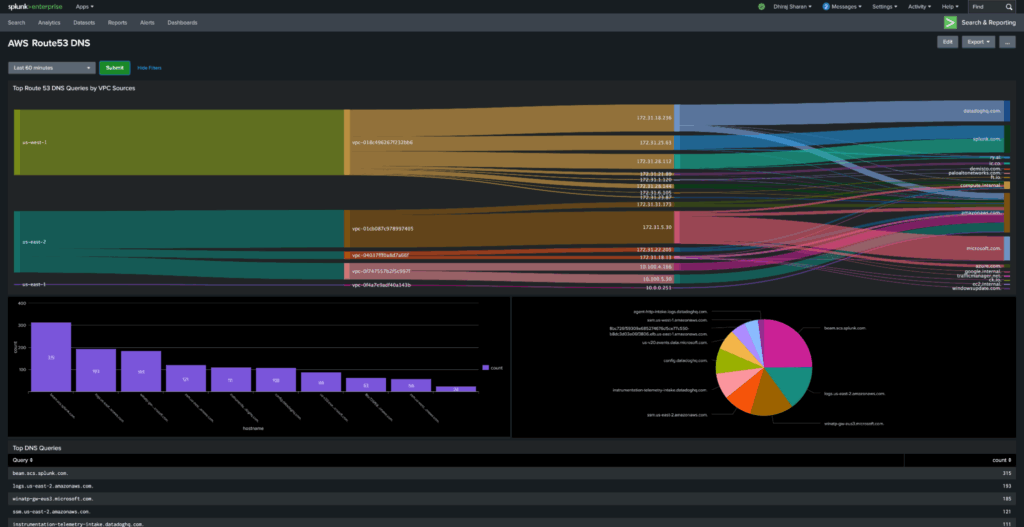

Investigative Content for Splunk

Query also provided the customer with relevant investigative content, such as dashboards, to support their analysts’ workflows.

For example, the customer can monitor the top Route 53 queries by source VPC:
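As a rough illustration of the aggregation behind such a view (not the dashboard’s actual SPL), here is a Python sketch that counts the most frequent query names per source VPC, assuming records shaped like the raw sample shown earlier (`vpc_id` and `query_name` keys).

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def top_queries_by_vpc(records: Iterable[Dict], n: int = 5) -> Dict[str, List[Tuple[str, int]]]:
    """Return the n most frequent query names for each source VPC."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for rec in records:
        # Count each lookup under the VPC it originated from.
        counts[rec["vpc_id"]][rec["query_name"]] += 1
    # most_common(n) returns (query_name, count) pairs, highest count first.
    return {vpc: c.most_common(n) for vpc, c in counts.items()}
```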

The customer declared the pilot a success at this point, since the key goal had already been achieved. We went on to prove out the pilot’s stretch goals as well, as covered in the next section.

Enabling Custom Detections in Splunk

One of the stretch goals was to enable detections in Splunk for the customer’s use cases. For other data sources already in various Splunk indexes, the customer was using several detections authored and maintained by Splunk’s Threat Research team (see https://research.splunk.com/detections/). These detections were based on Splunk’s Common Information Model (CIM).

Here is an example of how we converted a detection from CIM (which requires the data to be ingested into Splunk) to OCSF and ran it against the remote S3 data source above, without any Splunk indexing.
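Conceptually, much of such a conversion is a field rename. The Python sketch below is hypothetical: the CIM names are taken from the Network Resolution data model as we understand it, the OCSF names from DNS Activity, and the mapping is not Query’s. It also evaluates locally the Ngrok hostname patterns used in the detection that follows, lowercasing names and dropping the trailing dot that Route 53 logs include.

```python
from fnmatch import fnmatchcase
from typing import List

# Hypothetical field map: Splunk CIM Network Resolution -> OCSF DNS Activity.
CIM_TO_OCSF = {
    "query": "query.hostname",
    "query_type": "query.type",
    "reply_code": "rcode",
    "answer": "answers.rdata",
    "src": "src_endpoint.ip",
}

# Hostname patterns from the Ngrok detection; '*' matches any run of characters.
NGROK_PATTERNS = ["*.ngrok.com", "*.ngrok.io", "ngrok.*.tunnel.com", "korgn.*.lennut.com"]


def translate_fields(cim_fields: List[str]) -> List[str]:
    """Rename CIM fields to their (assumed) OCSF equivalents."""
    return [CIM_TO_OCSF[f] for f in cim_fields]


def is_ngrok_lookup(hostname: str) -> bool:
    """True if a queried hostname matches any Ngrok pattern."""
    # DNS names are case-insensitive; also drop the trailing root dot.
    name = hostname.lower().rstrip(".")
    return any(fnmatchcase(name, p) for p in NGROK_PATTERNS)
```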

This particular detection, Ngrok Reverse Proxy on Network (from Splunk Security Content), alerts on unauthorized use of the Ngrok reverse proxy. Here is how it was enabled using the Query Federated Detections capability:

```
| queryai search="dns_activity.query.hostname IN ('*.ngrok.com', '*.ngrok.io', 'ngrok.*.tunnel.com', 'korgn.*.lennut.com')"
```

Pilot Met and Exceeded Success Criteria: What’s Planned Next

Now that the pilot and production phase 1 have been successful, the Query Security Data Mesh makes the following implementation phases possible:

- Expand DNS monitoring to all AWS accounts and regions

- Add MISP connector to correlate DNS lookups against MISP IOCs

- Onboard a subset of VPC Flow Logs via the same approach

- Expand to public DNS query logging (see Public DNS query logging – Amazon Route 53)

- Move other high-volume network data out of Splunk indexes and into S3 to reduce Splunk licensing costs

Summary

In this Query customer success story, we learned about the customer’s environment and the problem they wanted to solve: expanding visibility into the AWS Route 53 Resolver DNS queries made across multiple regions in their multiple AWS accounts. The business need was to monitor for malware activity without increasing Splunk costs, and Splunk was the primary SOC tool the security team wanted to keep using. The customer ran a pilot of the Query Security Data Mesh that met their use-case needs and exceeded the security team’s organizational goals.

For more information on any of the above, please reach out.